Navigating the government’s new project data standard

The government’s new programme and project data standard emphasises the need for robust and real-time data to inform project management, declares PlanRadar’s Rob Norton.

When it comes to monthly reporting, project managers in the public sector all know the drill: extract data from three different systems, reformat it into a specific spreadsheet layout, and cross-check figures that never quite match. The result is a ‘reporting fatigue’ that has plagued infrastructure delivery for years, in which high-quality information captured on site is often lost in translation as it moves up the chain.

The government’s Programme and Project Data Standard, launched in December last year by Government Project Delivery (GPD) and the National Infrastructure and Service Transformation Authority (NISTA), aims to break this cycle. By creating a common language for reporting risks, costs and milestones, the standard is intended to ensure that project data no longer sits in dozens of incompatible formats.

Beyond ‘data fragmentation’

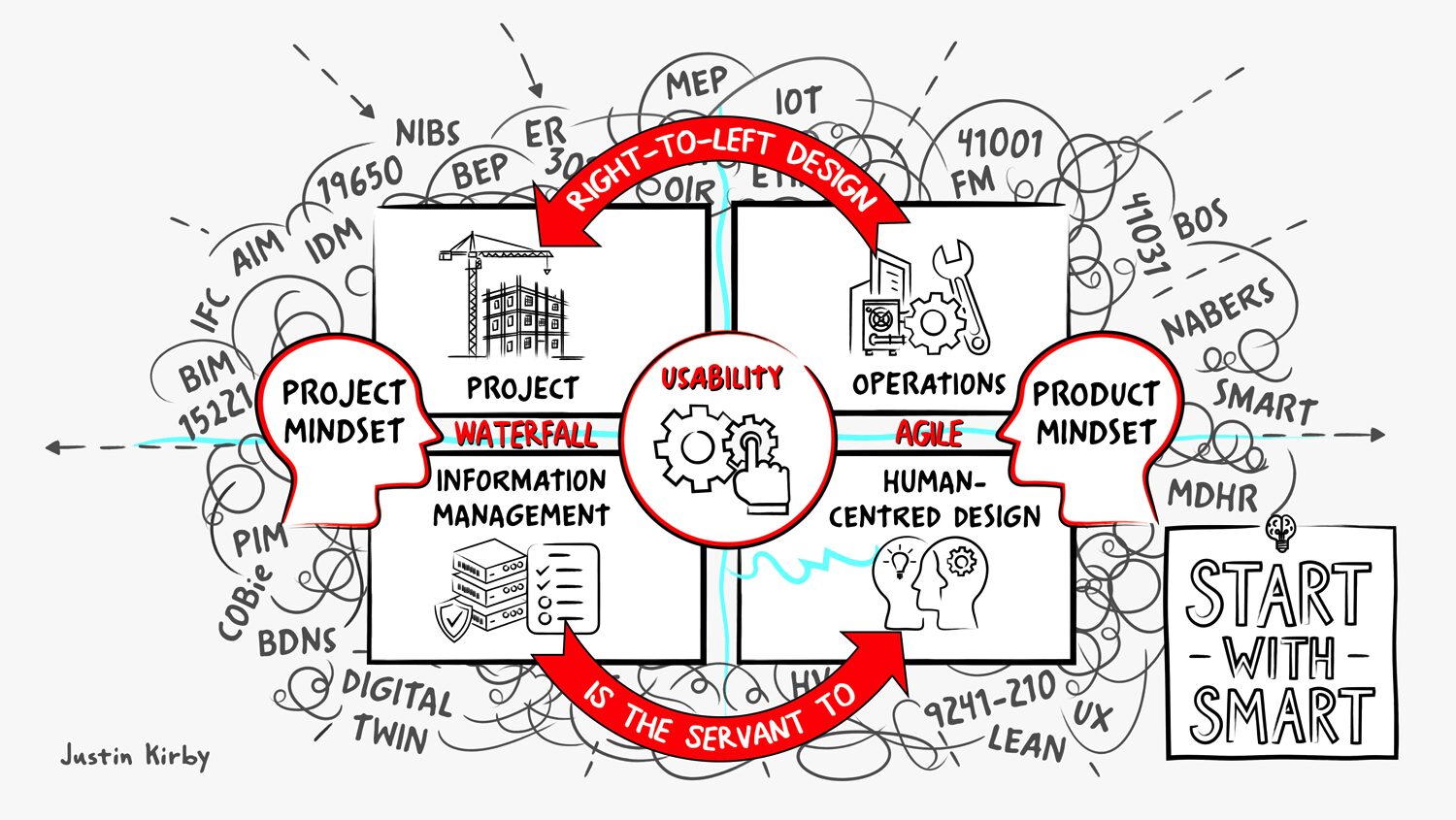

The standard is intentionally software-agnostic. The government is not mandating a single, rigid IT solution: it’s defining the actual data fields and formats that any platform should be able to export. This flexibility is critical for the construction industry, where teams need tools that work in the mud and on the scaffolding, not just back in the office.

However, the GPD handbook poses a pointed question to every leadership team: “To what extent does your current configuration support the expectations set by the standard?” Many will find their current ‘as-is’ status relies on retroactive data entry – where someone sits at a desk typing up notes from paper or emails days after the work happened. This is the antithesis of the ‘data hygiene’ required for the 2026 trial phase.

Empowering the data steward

The new standard also clarifies the hierarchy of accountability. Roles such as senior responsible owners (SROs) and data stewards are now formally tasked with managing the quality of specific data entities, and within this framework anyone on a project team can act as a data steward.

To support these roles, we must adopt configurable platforms that deliver compliance ‘by design’. For example, when a site engineer logs a milestone or a risk on their tablet, a modern platform can automatically assign an identifier in the correct Risk ID format and structure the data to government requirements in the background. This turns the data steward from a ‘data chaser’ into a ‘data verifier’.

The standard’s ultimate goal is to enable better insights and decision-making. By capturing data at the source, real-time assurance becomes possible. Digital signatures, time-stamped logs and onsite photographs linked directly back to quality records provide an audit trail that manual spreadsheets cannot match.

Instead of chasing information weeks after the fact, assurance teams can review progress as it happens, allowing problems to surface early enough to be fixed. This level of documentation is what ‘good enough’ looks like for the 2026 trial.

Make good use of the window

The 12-month trial period, running until 31 December 2026, is a crucial window for organisations to audit their current data state and identify gaps. The clock is already ticking to build capacity before the second version of the standard is published in 2027 and full compliance becomes mandatory in 2029. I encourage project leads to start with one discrete pilot project and use it to test configurable digital tools that bridge the gap between site-level reality and high-level reporting.

Those who act now will find themselves better positioned not only for compliance but also, equally importantly, for more efficient and transparent project delivery overall.

Keep up to date with DC+: sign up for the midweek newsletter.