How to measure the ROI of AI

Struggling to build a business case that justifies investment in AI or AI-enabled processes? For those who missed his presentation at Digital Construction Week, Sammy Newman from Vinci Construction proposes a mindset for measuring the return on investment (ROI) of AI.

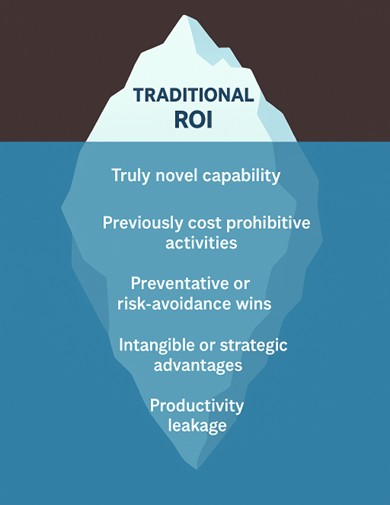

My interest in the ROI of AI in construction began with a simple problem: how do we value something that was previously impossible? Traditional ROI models are excellent for prioritisation, allowing us to allocate time and energy to high-impact activities. However, AI often delivers novel capabilities, and these are much harder to value.

This led me to dive into leading industry reports to see how others track ROI. What I found is that there’s no one-size-fits-all approach. The framework I cover here offers food for thought and should help you decide how to track the ROI of your own AI activities. AI isn’t going anywhere, and we need to focus limited resources on initiatives that provide genuine value.

Why don’t traditional ROI models work?

Traditional ROI works brilliantly when you’re polishing an existing process. But AI often tackles jobs that never had a ‘before’ state because they were too costly or technically out of reach. ROI relies on comparing past benchmarks with current performance, but what if there’s no past to compare to? Traditional ROI also ignores strategic or transformative outcomes that don’t fit neatly into cost-saving calculations. Perhaps the problem isn’t asking about ROI at all, but asking about it too early, too narrowly or too literally.

Here are some specific examples where traditional ROI models break down:

- Novel capability: AI can summarise thousands of documents, but there is no human baseline because no one could read that volume quickly enough.

- Previously cost-prohibitive activities: 24/7 site tracking, now feasible with drones, is theoretically possible with people, but the cost would be astronomical.

- Preventative wins: the accident that never happens. How do you calculate ROI on something that didn’t occur, and how do you attribute that outcome to AI? These intangible benefits are real, but complex to measure.

- Strategic advantages, such as brand strength and talent magnetism: AI delivers these results gradually, and they become transformative over time.

- Productivity leakage: when AI assists part of someone’s work, they don’t disappear; they move on to higher-value tasks. That value spreads across the organisation rather than neatly appearing in one metric.

The challenge is balancing tangible and intangible benefits, short-term gains and long-term transformations. Traditional ROI wasn’t built for this complexity.

What should we ask instead?

Some key questions help:

- What did we enable that we couldn’t do before?

- How quickly are we learning?

- Are we reducing uncertainty?

- Does this work support our strategic goals?

These questions acknowledge that transformative value isn’t always immediate or purely financial. They help you evaluate progress and make decisions even when traditional metrics fail. Portfolios of AI projects outperform single initiatives, and building internal capability can be as valuable as immediate cash returns.

What’s the mindset shift needed?

First, not every benefit is immediately measurable, but it still matters. Capabilities built, cultural changes and lessons learned all add long-term value. Second, use multiple lenses (strategic, operational and cultural), each of which reveals a different kind of value.

Crucially, treat AI as a portfolio, not a silver bullet. Test, learn, then scale successes. No single project transforms a business; multiple initiatives do. Organisations seeing real transformation run multiple experiments, learn fast and build on successes. ROI isn’t the result of a single project; it’s the compound effect of an AI-enabled business model.

Here’s one example framework, inspired by industry reports, for categorising solutions across efficiency, quality and transformation:

- direct capture: clear, measurable returns;

- distributed value: benefits spread organisation-wide (eg, productivity leakage);

- defensive value: risks avoided, problems prevented; and

- emergent value: unexpected benefits from AI adoption.

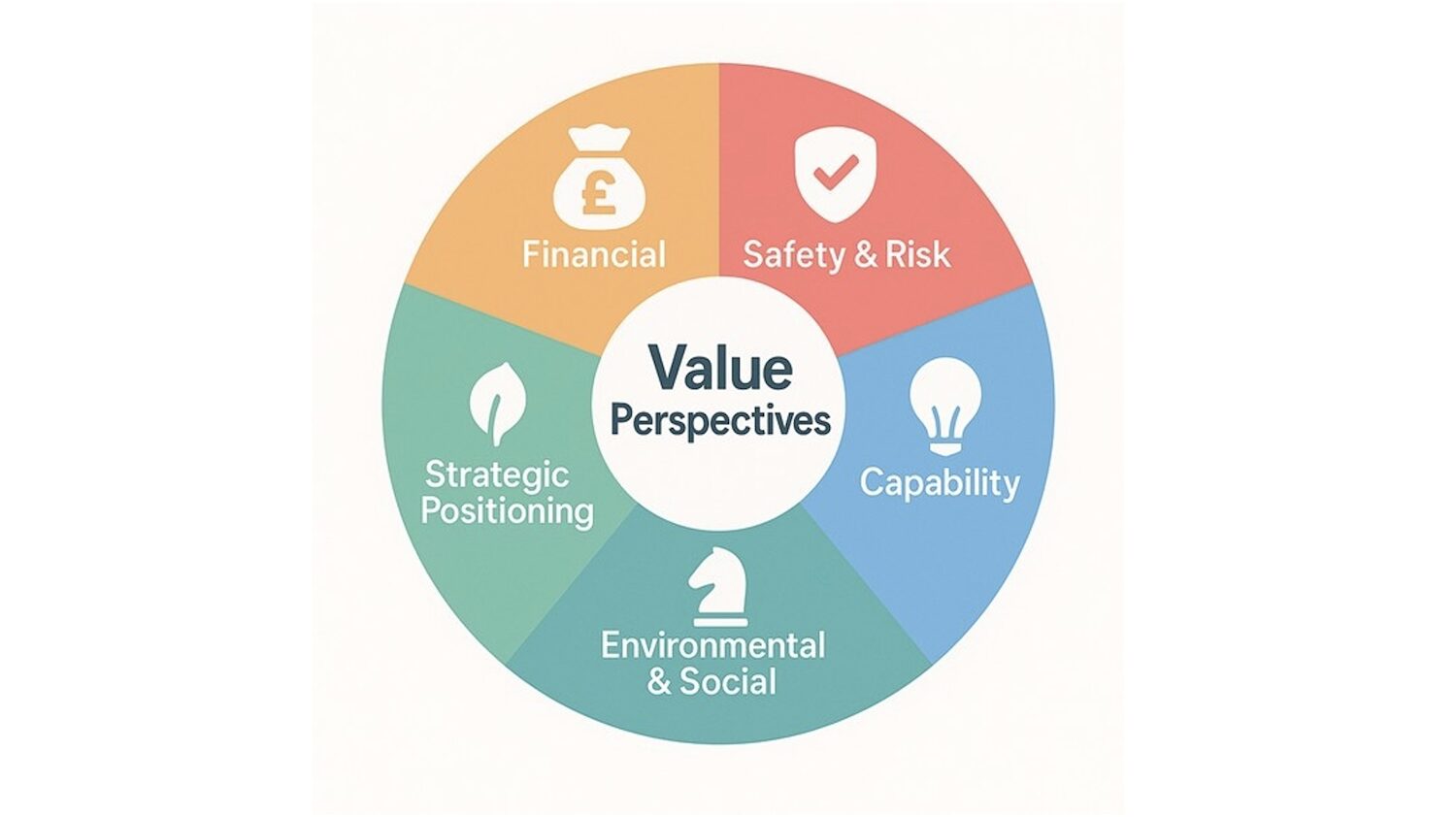

Consider evaluating AI initiatives through five complementary lenses:

- financial lens: ROI, operational efficiencies, reduced rework.

- safety and risk lens: prevented accidents, improved worker wellbeing, enhanced schedule reliability.

- capability lens: newly possible activities, knowledge assets, organisational learning.

- environmental and social lens: sustainability gains, improved client satisfaction, positive community and workforce impacts.

- strategic positioning lens: a stronger market position, talent attraction, competitive differentiation, new future options.

By scoring each initiative across all lenses on a simple one-to-five scale, you can compare projects holistically. An initiative with modest financial returns might still have a significant impact on safety or strategic positioning. Consistency matters: applying uniform criteria lets you compare projects meaningfully and build a balanced portfolio.
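To make the scoring idea concrete, here is a minimal Python sketch, assuming equal weights for the five lenses and a plain sum as the combined score. The lens identifiers, the `Initiative` class, the `total_score` and `rank` helpers and every initiative and score in it are hypothetical, chosen for illustration rather than taken from the framework itself.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

# The five lenses named above; these identifiers are illustrative.
LENSES = ("financial", "safety_risk", "capability", "environmental_social", "strategic")


@dataclass
class Initiative:
    name: str
    scores: Dict[str, int]  # lens -> score on a one-to-five scale

    def __post_init__(self) -> None:
        # Uniform criteria: every initiative is scored on every lens, 1 to 5.
        unknown = self.scores.keys() - set(LENSES)
        missing = set(LENSES) - self.scores.keys()
        if unknown or missing:
            raise ValueError(f"{self.name}: unknown {sorted(unknown)}, missing {sorted(missing)}")
        for lens, score in self.scores.items():
            if not 1 <= score <= 5:
                raise ValueError(f"{self.name}: {lens} score {score} is outside 1-5")


def total_score(initiative: Initiative, weights: Optional[Dict[str, float]] = None) -> float:
    """Weighted sum across all lenses; equal weights unless others are supplied."""
    if weights is None:
        weights = {lens: 1.0 for lens in LENSES}
    return sum(weights[lens] * initiative.scores[lens] for lens in LENSES)


def rank(initiatives: List[Initiative], weights: Optional[Dict[str, float]] = None) -> List[Initiative]:
    """Highest combined score first."""
    return sorted(initiatives, key=lambda i: total_score(i, weights), reverse=True)


# Hypothetical initiatives and scores, for illustration only.
portfolio = [
    Initiative("document summariser", {"financial": 2, "safety_risk": 3, "capability": 5,
                                       "environmental_social": 2, "strategic": 4}),
    Initiative("drone progress tracking", {"financial": 3, "safety_risk": 4, "capability": 4,
                                           "environmental_social": 3, "strategic": 3}),
    Initiative("take-off automation", {"financial": 5, "safety_risk": 1, "capability": 2,
                                       "environmental_social": 1, "strategic": 2}),
]

# A finance-only weighting, for contrast with the multi-lens view.
FINANCE_ONLY = {lens: (1.0 if lens == "financial" else 0.0) for lens in LENSES}

for initiative in rank(portfolio):
    print(f"{initiative.name}: {total_score(initiative):.0f}")
# drone progress tracking: 17
# document summariser: 16
# take-off automation: 11
```

With equal weights, the document summariser ranks second despite the weakest financial score, whereas `rank(portfolio, FINANCE_ONLY)` would put take-off automation first. Keeping the per-lens scores alongside the total, rather than reporting only the sum, preserves the profile that explains why each initiative ranks where it does.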

Frameworks like this force you to look beyond immediate cost savings. They acknowledge that projects with low immediate returns may still be highly valuable because they build capabilities or create future options. The key takeaway isn’t a specific framework, but the principle of evaluating value through multiple lenses. Ensure your approach captures more than traditional financial metrics.

Embracing the measurement mindset

Creating baselines for previously invisible activities turns every future AI tweak into measurable improvement, nudging construction into a data-driven era.

Measurement is progressive. Before launch, actual ROI cannot be measured because the activity didn’t previously exist. Instead, use early proxy indicators to show whether you are heading in the right direction: for example, more bids submitted or fewer contingency hours.

Once live, become specific. For take-off AI, measure bids priced per month and changes in hit rate. For scheduling optimisation, track re-optimisation frequency and delay days avoided. For generative design, measure change orders and client satisfaction. Pick two or three lead metrics per pilot and keep it simple: focus on what indicates success for that specific case. That first baseline is gold, because it turns every future iteration into measurable progress.
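As an illustration of baselining a pilot’s lead metrics, the Python sketch below takes the mean of the first readings after go-live as the baseline and reports later readings as relative change against it. Both choices are assumptions, as are the `LeadMetric` and `Pilot` classes and all the numbers, which are invented for the example and are not project data.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List


@dataclass
class LeadMetric:
    name: str
    baseline_readings: List[float]  # first measurement window after go-live (assumed)
    higher_is_better: bool = True

    @property
    def baseline(self) -> float:
        # The first baseline is the reference point for every later iteration.
        return mean(self.baseline_readings)

    def progress(self, current: float) -> float:
        """Relative change against the baseline; positive always means improvement."""
        if self.baseline == 0:
            raise ValueError(f"{self.name}: zero baseline, relative change is undefined")
        change = (current - self.baseline) / self.baseline
        return change if self.higher_is_better else -change


@dataclass
class Pilot:
    name: str
    metrics: List[LeadMetric]

    def __post_init__(self) -> None:
        # Keep it simple: two or three lead metrics per pilot.
        if not 2 <= len(self.metrics) <= 3:
            raise ValueError(f"{self.name}: choose two or three lead metrics")

    def report(self, current: Dict[str, float]) -> Dict[str, float]:
        """Progress for each lead metric, rounded to three decimal places."""
        return {m.name: round(m.progress(current[m.name]), 3) for m in self.metrics}


# Illustrative numbers only, not real project data.
takeoff = Pilot("take-off AI", [
    LeadMetric("bids priced per month", [8, 10, 9]),
    LeadMetric("hit rate", [0.20, 0.22, 0.24]),
])
print(takeoff.report({"bids priced per month": 12, "hit rate": 0.25}))
# {'bids priced per month': 0.333, 'hit rate': 0.136}
```

For metrics where lower is better, such as change orders on a generative design pilot, setting `higher_is_better=False` flips the sign so that a positive figure still reads as improvement across every pilot.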

Keep up to date with DC+: sign up for the midweek newsletter.